I build product systems.

Founding engineer crafting AI-native products across mobile, web, data, and operations.

Selected work

Shipped systems across domains

Outcome-focused systems built across web, mobile, data, and AI.

I handle UX, backend, data, and AI to get a startup to its first real users.

Revage

01 · Longevity platform blending wearables, daily tracking, and AI guidance.

- Turned raw health signals into a clear daily score and plan

- Built the AI guidance loop that keeps users consistent

Stackr

02 · SaaS ROI analytics platform that reveals value, waste, and feature adoption.

- Feature-level usage insights to surface underused licenses

- Finance-ready ROI reporting for procurement and ops

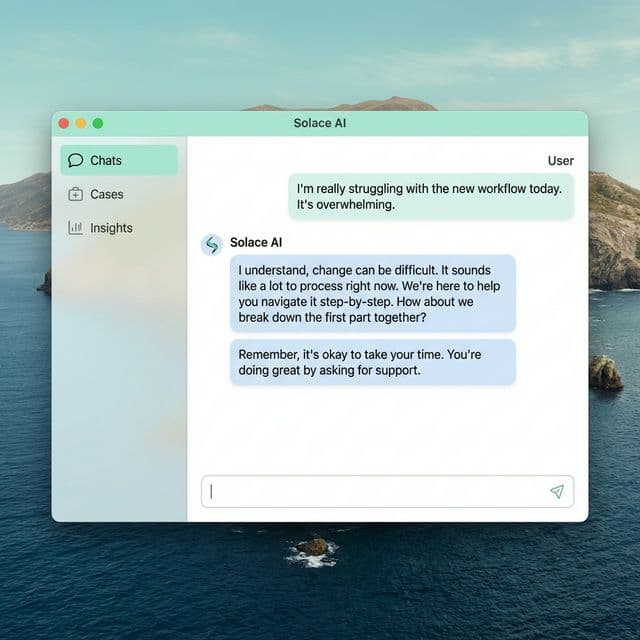

Solace AI

03 · AI-assisted experience designed to feel supportive and human.

- Supportive AI flows with safety guardrails

- Prompt + eval loops to keep responses grounded

In progress

Unreleased builds

Early explorations and private builds in active development.

FrameForge

01 · Open-source demo recorder that captures both your screen and your live explanation, so demos feel clear and personal.

- Records your live explanation alongside your on-screen workflow in real time

- Built as an open-source alternative to Loom for transparent, customizable demo sharing

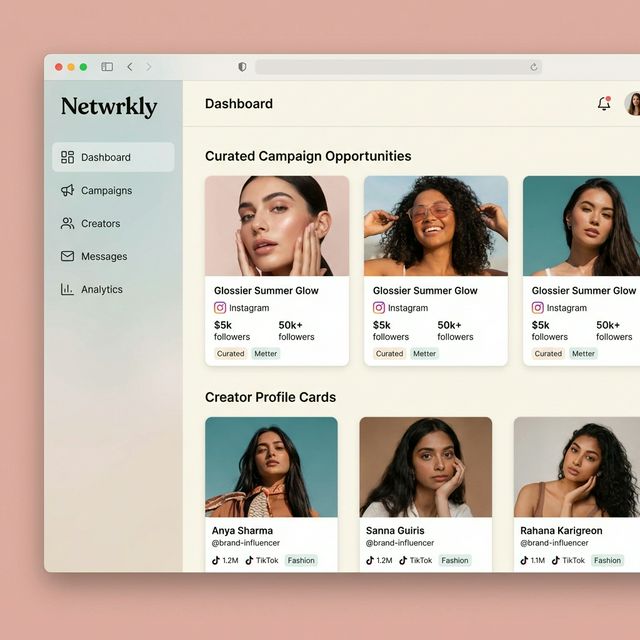

Netwrkly

02 · Collaboration platform where brands and influencers connect based on campaign fit, requirements, and execution goals.

- Helps influencers discover brand opportunities aligned to audience and content style

- Enables brands to find creators based on campaign requirements and collaboration criteria

PitchPrep

03 · AI speaking coach that helps users become clearer, more confident communicators across vocabulary, tone, accent, and delivery.

- Improves vocabulary, speaking style, tone, and accent through guided practice

- Designed to build confident speakers for interviews, presentations, and high-stakes conversations

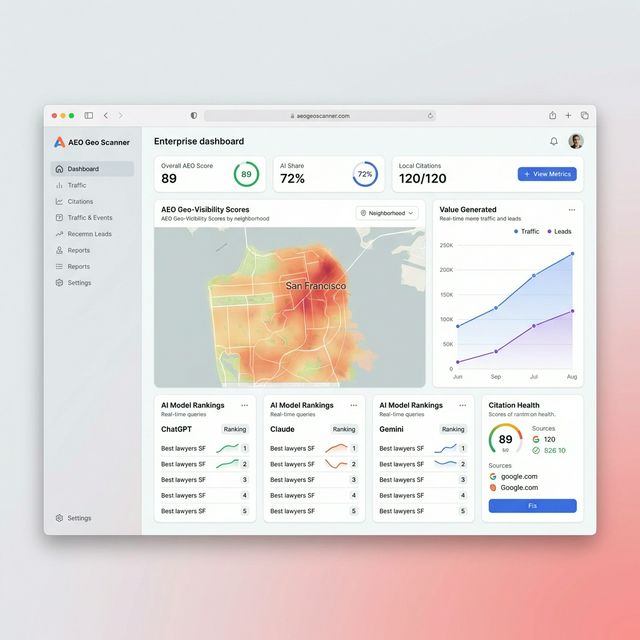

AEO Geo Scanner

04 · Visibility engine built to improve discoverability in AI answer engines while also strengthening search rankings.

- Boosts presence in answer engines such as ChatGPT, Claude, and Gemini by creating stronger citation opportunities

- Improves SEO posture with structured optimization signals and geo-aware visibility insights

Contact

Let's talk.

YC founders: I join as a founding engineer or product builder to ship fast, then scale cleanly.

Available for full-time, contract, or advisory.